Models:

Introduction

Processing with AI

Now that we have our dataset on Lobe.ai, we can train one of the models available and start making predictions! We saw that the two models ResNet and MobileNet are Deep Learning models.

In this chapter we are going to discover the different kinds of models we encounter in Machine Learning, and their specific characteristics.

Machine Learning models

You already know that AI is a mix of Computer Science and Statistics, and that Machine Learning models are mainly based on statistics and logic. We can even use the term "statistical models" to describe Machine Learning models and, by extension, Deep Learning models.

A statistical model is an approximate mathematical description of the mechanism that generated observations.

Applying this definition, we first have the input data, such as physical measurements or pictures. Then we have the mechanism that turns these inputs into outputs, which is the role played by the statistical model. Finally, as the observations, we have an inference, such as a price.

Now let's apply this definition to a real use case:

Example

How to estimate a real estate price:

Traditionally:

- We have as input data the description of the real estate with all its specificities. For example, the number of rooms, the surface in square meters, etc.

- Then we have a real estate agent who can set a price based on the description, drawing on their experience and an analysis of the current market.

- Finally we have the estimated price.

Using a statistical model:

- We have as input data the description of the real estate with all its specificities.

- Then we have a Machine Learning model trained on many such descriptions and their known prices.

- Finally we have the estimated price.

Just like a child, a Machine Learning model learns from experience to define its behavior in new situations. The past experiences are the training dataset, and the new situation is the production dataset, made up of data the model has never encountered. This is the difference between the training phase and the inference phase. During the training phase, the chosen model is tuned on the collected dataset. During the inference phase, the pre-trained model is used on unknown inputs.
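To make the two phases concrete, here is a minimal sketch in Python. It assumes a deliberately tiny model (a straight line fitted by least squares) and made-up surfaces and prices; the names `train` and `predict` are hypothetical and only serve the illustration.

```python
# Hypothetical training data: (surface in square meters, price in euros).
training_data = [(30, 150_000), (50, 250_000), (70, 350_000), (100, 500_000)]


def train(samples):
    """Training phase: fit price = slope * surface + intercept by least squares."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    variance = sum((x - mean_x) ** 2 for x, _ in samples)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(trained_model, surface):
    """Inference phase: apply the tuned parameters to an input never seen before."""
    slope, intercept = trained_model
    return slope * surface + intercept


trained_model = train(training_data)          # training phase
estimated_price = predict(trained_model, 85)  # inference: 85 m² was not in the data
print(round(estimated_price))
```

A real estate model would of course use many more variables and samples; the point here is only that training produces tuned parameters, and inference reuses them on new inputs.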

It is therefore necessary to distinguish between a model and a pre-trained model.

- A model corresponds to a system that is capable of self-tuning in order to make predictions.

- A pre-trained model is that same system once it has been tuned on a dataset.

Depending on the type of prediction we want to make and the dataset we have, we will have to choose a suitable model. Let's see the choices we have in Machine Learning.

As you can see in the diagram above, there are three subfields in Machine Learning, that is, three ways to train models:

- Supervised Learning is when our data is labeled (the product price, the car consumption, etc.) and we are trying to figure out what statistically indicates whether something matches one label or another.

- Unsupervised Learning is when we don't have those labels.

- Reinforcement Learning is a type of machine learning where an agent learns to make decisions by interacting with an environment and receiving rewards or punishments based on its actions. The model's prediction changes the data, which is then used to make the next prediction, and so on. For example, a poker-playing robot changes the state of the game by choosing a specific action, like going all-in.

Deep Learning is not part of this diagram because it is not really a subfield but a type of model, even if its name can be confusing.

Then there are four big categories of models in Machine Learning:

- Classification labels objects such as images. It can be used to recognise a number in an image, or to check whether a customer belongs to the group of customers who might unsubscribe. It can even be used to detect a virus based on some other parameters.

- Regression predicts numerical values. It can be used to predict a real estate value based on its specificities.

- Clustering detects patterns in datasets of unlabeled data and tries to group similar data together (similar client types, similar Netflix users, etc.).

- Dimensionality reduction seeks to reduce the number of dimensions (variables) in the data, and is used more and more because of big data. It can be used to identify the most informative parameters in large amounts of data, for example when studying customer churn. It can also improve models by reducing their error rate and the risk of overfitting: the more variables you have, the higher the risk that some of them are of low quality, which increases the probability of poor predictions.

Different types of models can be used in these categories: Neural Networks, Decision Trees, etc. The difference between these types of models is their structure and the way they adjust to the data. Neural Networks are among the most widely used today.
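As an illustration of one of these categories, here is a small sketch of clustering in Python. It assumes a very basic one-dimensional k-means with hand-picked starting centers and made-up spending figures; `k_means_1d` is a hypothetical helper, not a library function.

```python
def k_means_1d(values, centers, iterations=10):
    """Group 1-D values around the given starting centers (basic k-means)."""
    for _ in range(iterations):
        # Assignment step: attach each value to its closest center.
        clusters = [[] for _ in centers]
        for value in values:
            closest = min(range(len(centers)), key=lambda i: abs(value - centers[i]))
            clusters[closest].append(value)
        # Update step: move each center to the mean of its cluster.
        centers = [
            sum(cluster) / len(cluster) if cluster else center
            for cluster, center in zip(clusters, centers)
        ]
    return centers, clusters


# Hypothetical monthly spending (in euros) of eight clients, with no labels.
monthly_spending = [12, 15, 14, 10, 95, 102, 98, 110]
centers, clusters = k_means_1d(monthly_spending, centers=[0.0, 50.0])
print(centers)
print(clusters)
```

No label is ever given to the algorithm: the two groups of similar clients emerge from the data alone, which is what distinguishes clustering from classification.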

Which model for which use?

The model used depends on the problem we are trying to solve. Dimensionality reduction models are often used during the data-cleaning process, in order to simplify the problem. It is like removing columns (variables) from an Excel sheet. Then, let’s say we are building:

- a trash can that detects the type of plastic to recycle it properly. The selected model will be found within the “classifiers”.

- a tool that estimates house prices. The selected model will be found within the “regressors”.

- a marketing tool that sends notifications to similar clients. The selected model will be found within the “clustering” models.

However, the data at hand also defines what is possible. If our data:

- has labels, it is possible to do supervised learning such as classification.

- doesn't have labels, it is only possible to apply unsupervised learning methods like clustering, anomaly detection, etc.

- has a small number of samples, it is only possible to use simple models; a complex model like a Neural Network might overfit.

- is only composed of house pictures, we won't be able to use a regression model to predict their price. However, it is possible to use classifiers if the data is labeled, and clustering models otherwise.

These non-exhaustive data properties add constraints to what is possible.

During this course, you will mostly use Deep Learning models, like in Lobe.ai and Akkio. So let's have a look at them!

Neural Networks and Deep Learning

Neural Networks

But first, what are Neural Networks? Let's start by stating two things that most people get wrong about neural networks:

- Artificial "neurons" are just a fancy way to describe a programming building block that processes numbers and only numbers.

- We use the term "neuron" only because it has some high-level similarities with a biological neuron.

Let's compare a biological neuron and an artificial neuron, also called a perceptron:

Neuron

A biological neuron is a cell, mainly found in our brains, that communicates with other neurons by using chemical or electrical signals. It receives inputs via multiple channels called dendrites and transmits its output (notice the singular here) through an axon that develops into synapses.

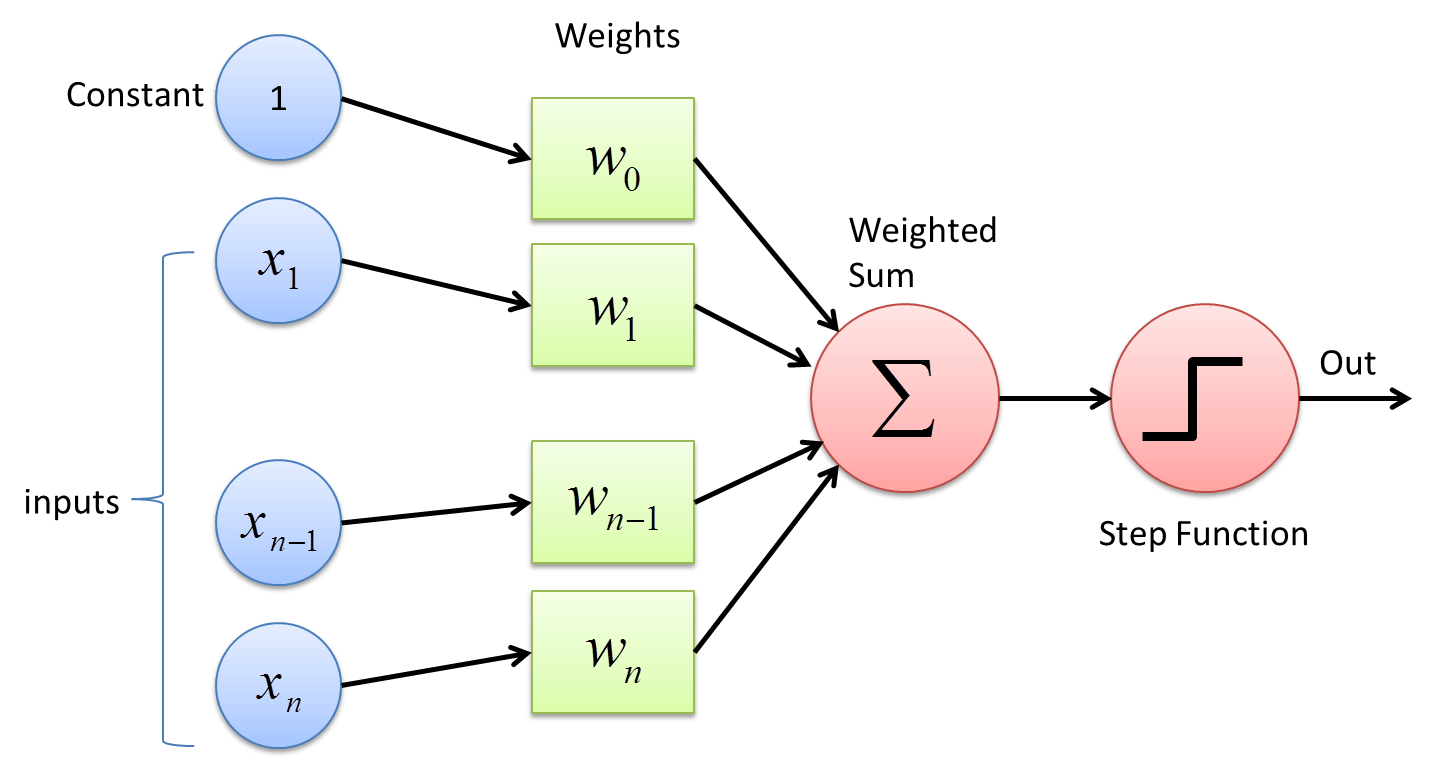

Perceptron

A perceptron is often called an "artificial neuron".

A perceptron takes one or several numbers as input and outputs a category. Each input is given a weight, a number that represents how strongly that input influences the output. To predict the category, the perceptron sums the weighted inputs and applies a predefined function (the activation function). Depending on the result of this function, the perceptron outputs one category or another.
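The computation described above fits in a few lines of Python. This is a sketch under assumptions: the weights are made up, the activation is a simple step function, and a bias term (a constant added to the sum, not mentioned in the text) is included because most formulations use one.

```python
def step(total):
    """Activation function: outputs category 1 if the total is positive, else 0."""
    return 1 if total > 0 else 0


def perceptron(inputs, weights, bias):
    """Sum the weighted inputs, add the bias, and apply the activation function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return step(total)


# Hypothetical weights: the first input counts twice as much as the second.
weights = [2.0, 1.0]
bias = -2.5  # assumption: a constant offset added to the weighted sum

print(perceptron([1, 1], weights, bias))  # weighted sum: 2 + 1 - 2.5 = 0.5
print(perceptron([0, 1], weights, bias))  # weighted sum: 0 + 1 - 2.5 = -1.5
```

With these values the first call outputs category 1 and the second outputs category 0, because only the first weighted sum is positive.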

When we say that we are "training" a model, what the algorithm is actually doing is just tweaking every weight to minimize the error rate, that is, to get the best accuracy on the training dataset.

You might now see why we tend to call a perceptron an "artificial neuron". They share some similarities: each is a single entity that takes one or several inputs and outputs one signal depending on those inputs. Except that perceptrons are just maths!
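As a rough illustration of what "tweaking every weight" can look like, here is a sketch of the classic perceptron learning rule on a toy dataset. The learning rate, the number of passes over the data and the bias term are assumptions chosen for the example; the models used in this course are trained with more elaborate algorithms.

```python
def predict(inputs, weights, bias):
    """Weighted sum followed by a step activation (category 0 or 1)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def train(dataset, learning_rate=0.1, epochs=20):
    """Perceptron learning rule: nudge each weight whenever a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in dataset:
            error = target - predict(inputs, weights, bias)  # -1, 0 or +1
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy training dataset: the category is 1 only when both inputs are 1.
dataset = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train(dataset)
accuracy = sum(predict(x, weights, bias) == y for x, y in dataset) / len(dataset)
print(weights, bias, accuracy)
```

When a prediction is correct the error is 0 and nothing changes; when it is wrong, the weights of the active inputs are pushed in the direction that would have reduced the error.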

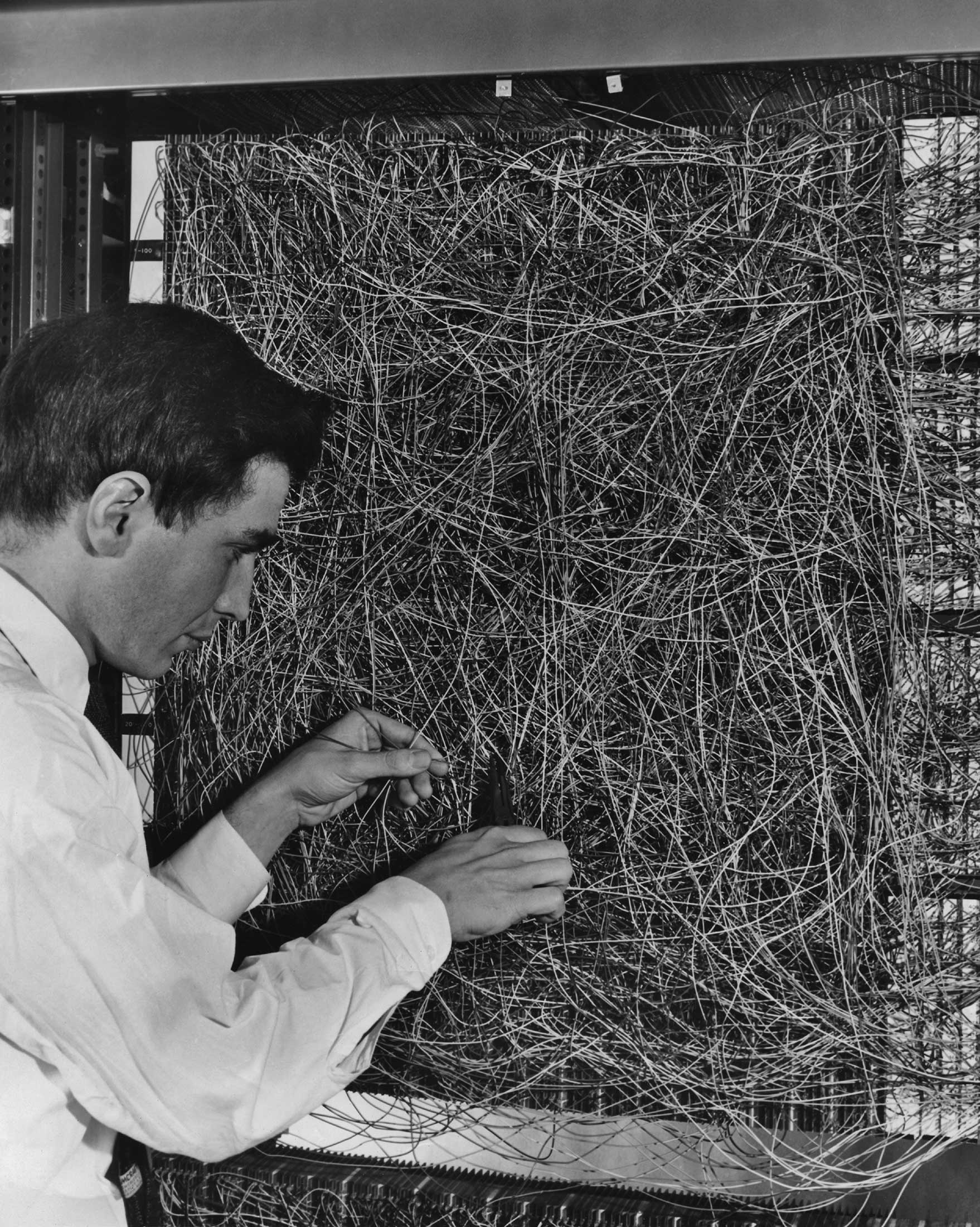

The perceptron was created in 1957 by Frank Rosenblatt. An early hardware implementation, the Mark I Perceptron, was largely analog and used motors that turned potentiometers during the learning phase to set the weights. It could process 20x20-pixel images, what a beast!

Networking

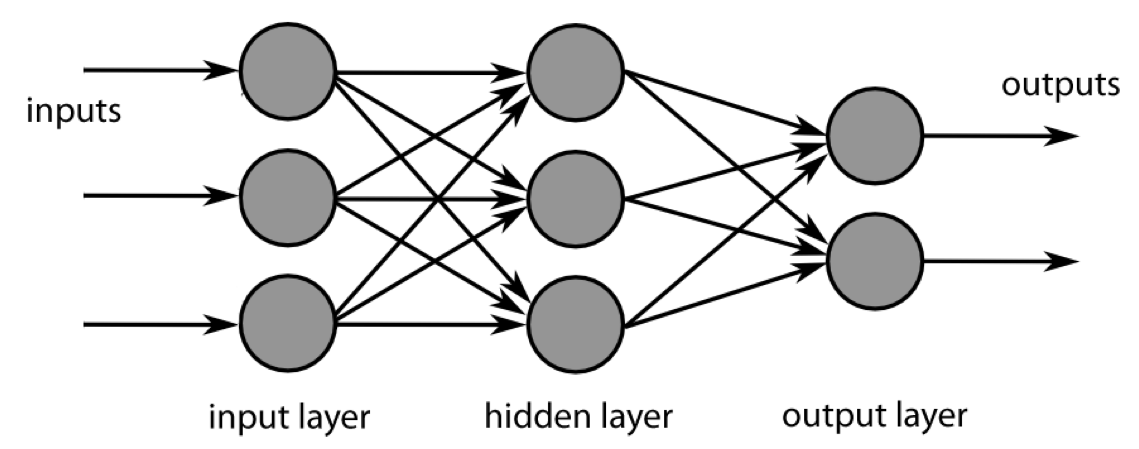

Let's see how to combine perceptrons and create a Neural Network!

A layer that is not an input or an output layer is called a hidden layer.

One of the simplest forms of Artificial Neural Networks (ANN) is the Multi-Layer Perceptron. Multiple perceptrons are simply linked:

- "Horizontally": the input of one perceptron is the output of another.

- "Vertically": the same input is sent to multiple neurons so they can "specialize" themselves in detecting specific patterns.
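The two kinds of links can be sketched with three perceptrons in Python. The weights below are set by hand for the illustration (they are assumptions, not trained values), and each neuron uses a step activation with a bias term.

```python
def neuron(inputs, weights, bias):
    """One perceptron: weighted sum followed by a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def tiny_network(a, b):
    # Hidden layer ("vertical" link): both neurons receive the same inputs
    # and each one specializes in detecting a different pattern.
    either = neuron([a, b], weights=[1, 1], bias=-0.5)  # fires if a or b is 1
    both = neuron([a, b], weights=[1, 1], bias=-1.5)    # fires if a and b are 1
    # Output layer ("horizontal" link): its inputs are the hidden outputs.
    return neuron([either, both], weights=[1, -1], bias=-0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", tiny_network(a, b))
```

This small network outputs 1 only when exactly one of its two inputs is 1, a pattern that a single perceptron cannot separate on its own.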

You can play with an ANN directly in your browser on TensorFlow's Playground to see how adding layers changes the behavior of a network.

You might be wondering how Data Scientists choose the shape of their networks. Well, that's a great question! Each neuron that you add comes with a new set of weights that need to be trained, which results in longer training times (and time is money!) and a need for more and more data to train the model. So, from a technical point of view, it involves finding the right balance between complexity and learning time. From a managerial point of view, they have to find the project scale that best optimizes performance, keeping in mind time and budget constraints.

In 2019, GPT-2, one of the largest Natural Language Processing (NLP) models at the time, had 1.5 billion parameters. It was trained on text from millions of web pages linked from Reddit posts!

Since the launch of this course, GPT-3 has been released; it contains 175 billion parameters. You can discover some projects based on GPT-3 here.

Since this course was last updated, GPT-4 has been released, and it is even more powerful. It can even use images as input!
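To get a feel for how quickly the number of weights grows with the shape of a network, here is a small counting sketch. It assumes fully connected layers where each neuron also has one bias; `count_parameters` is a hypothetical helper written for this example.

```python
def count_parameters(layer_sizes):
    """Count the weights and biases of a fully connected network.

    layer_sizes lists the number of neurons per layer, input layer first.
    """
    total = 0
    for inputs, neurons in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * neurons  # one weight per incoming connection
        total += neurons           # one bias per neuron (an assumption here)
    return total


print(count_parameters([2, 3, 1]))      # 13
print(count_parameters([400, 64, 10]))  # 26314
```

The second network, with 400 inputs (for example a 20x20 image), one hidden layer of 64 neurons and 10 outputs, already has more than 26,000 values to tune.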

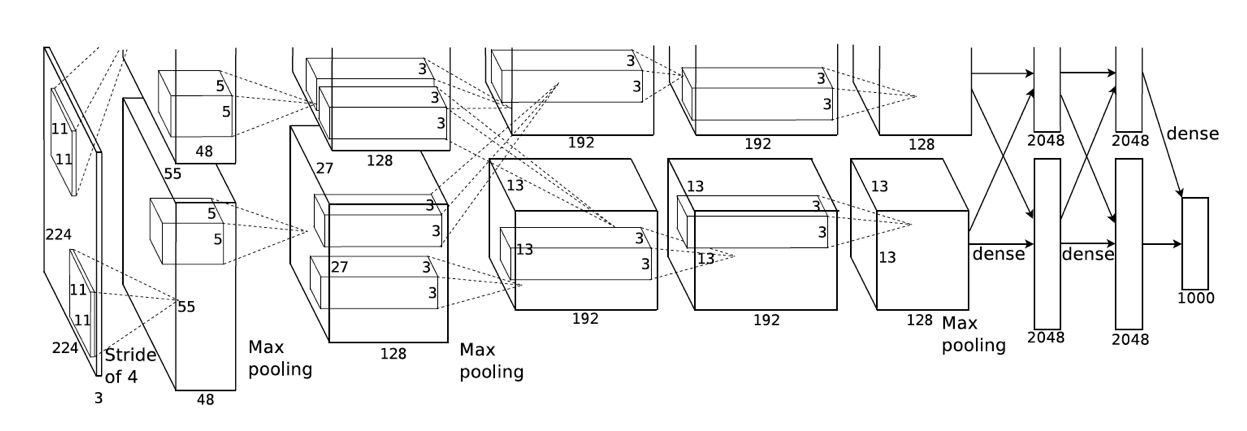

Deep Learning

Deep Learning models are based on Neural Network algorithms. They are complex multi-layer networks with more than one hidden layer. We call them "deep" because of these multiple layers. They are most often used to process language, speech, sound, handwriting and images. Indeed, the multiple layers make it possible to decompose complex data such as voice or images in a hierarchical way in order to classify it: identifying words in speech, or associating descriptive tags with images.

A Deep Learning model is a Machine Learning model that:

- Has a complex architecture: its building blocks and the way it is trained are more complex than those of a Multi-Layer Perceptron, and it includes more than one hidden layer.

- Needs a lot of data and processing time and power to be trained.

Learn how to use Runway, a website that lets anyone use state-of-the-art Deep Learning models without coding.

How to train a model correctly?

There are two frequent issues we can encounter while training a Machine Learning model. Both result in a biased trained model, and both come from the dataset and the variance inside it.


Underfitting

The first issue is underfitting: your trained model does not perform well because of a lack of diversity in your dataset.

Example

A classification model to detect balls 🏀

Goal: we want to train our model to learn what a ball is.

Dataset: our dataset is composed of some pictures of basketballs, soccer balls and baseballs.

Result: It does not perform well: when you show it an apple, it detects a ball!

In this example, our model is biased because it has not been trained on pictures that are diverse enough, and it "thinks" a ball is just a round object. Correcting the underfitting is simple: we just need to add more variance to our dataset. This means working on its completeness by adding more pictures of balls in more varied contexts!

Overfitting

The second issue is overfitting: our trained model does not perform well on unknown data because it has been "over-trained" on one dataset.

Example

A classification model to detect balls 🏀

Goal: we want to train our model to learn what a ball is.

Dataset: our dataset is composed of many pictures of basketballs, soccer balls and baseballs, in a lot of different contexts.

Result: It does not perform well: if you show it an apple, it correctly does not detect a ball, but when you show it a golf ball, it does not detect a ball either!

In this example, our model is not working properly because it has been trained too much on certain balls and knows them "by heart". To correct the overfitting, we need to separate our dataset into two parts: one used to train the model, and one kept aside to check how it performs on data it has never seen. We will go deeper into overfitting in the Ethics of AI module.
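The idea of separating the dataset in two parts can be sketched in Python. Everything here is a toy assumption: the dataset is a list of numbers labeled by a simple rule, the "memorizer" stands in for a model that knows its training data by heart, and the threshold model stands in for a simpler model that generalizes.

```python
# Hypothetical labeled dataset: a number is labeled 1 when it is greater than 50.
dataset = [(n, int(n > 50)) for n in range(1, 101)]

# Separate the dataset in two parts (here simply odd and even numbers).
train_set = dataset[::2]        # 1, 3, 5, ... used for training
validation_set = dataset[1::2]  # 2, 4, 6, ... never shown during training


def accuracy(model, samples):
    """Share of samples for which the model outputs the right label."""
    return sum(model(x) == label for x, label in samples) / len(samples)


# Overfit model: knows every training example "by heart", answers 0 otherwise.
memory = dict(train_set)


def memorizer(x):
    return memory.get(x, 0)


# Simpler model: learns a single threshold halfway between the two classes.
largest_zero = max(x for x, label in train_set if label == 0)
smallest_one = min(x for x, label in train_set if label == 1)
threshold = (largest_zero + smallest_one) / 2


def threshold_model(x):
    return int(x > threshold)


for name, model in [("memorizer", memorizer), ("threshold", threshold_model)]:
    print(name, accuracy(model, train_set), accuracy(model, validation_set))
```

Both models are perfect on the training part, so only the part kept aside reveals the difference: the memorizer is right half of the time on unseen numbers, while the threshold model still gets them all right.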

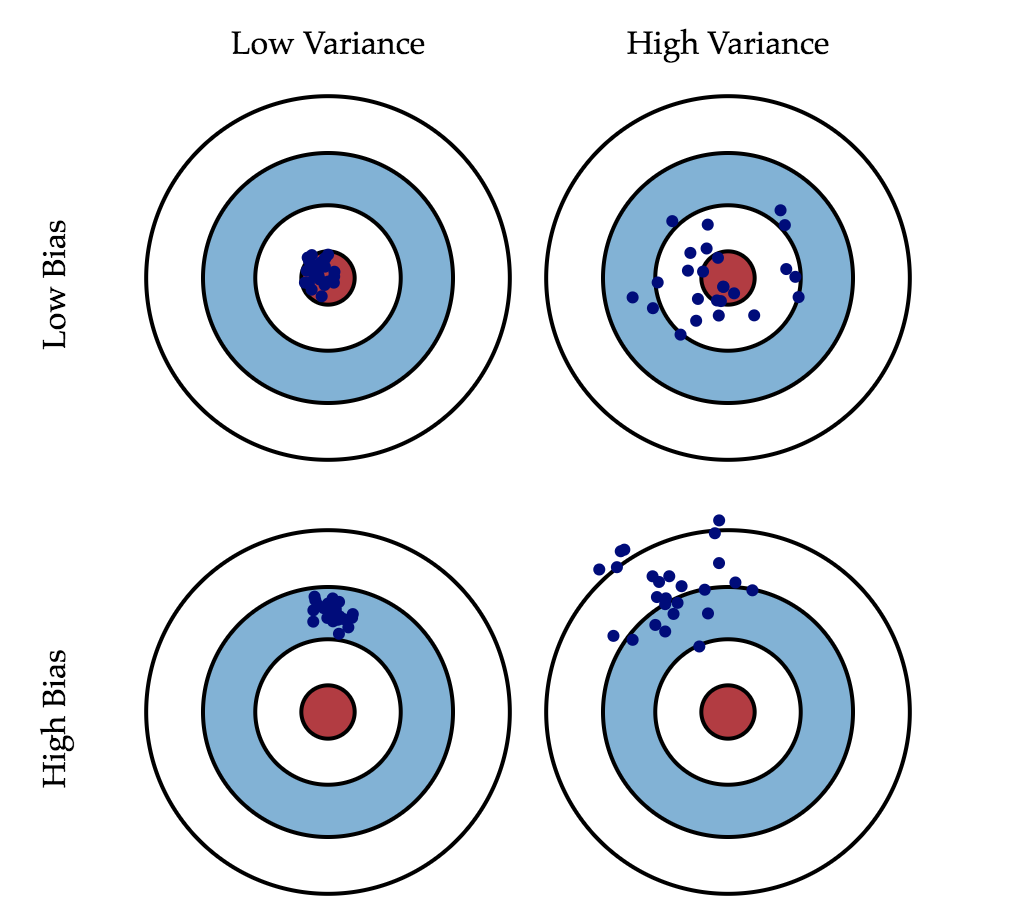

Underfitting happens when the variance is low and the bias is high, while overfitting happens when the variance is high and the bias is low. The whole difficulty lies in finding the right balance between bias and variance.

We can represent an underfit, an overfit and a regularized model with three linear regressions:

While the green line best follows the training data, it is too dependent on that data and it is likely to have a higher error rate on new unseen data, compared to the black line.

Measuring a model's performance

You ensured the model has a good fit, congratulations! Are you finished? Well, how did you measure the model's performance? Did you compute its accuracy (the proportion of correct answers)? What if that was not enough?

Let’s say we are an insurer trying to build a tool that predicts, for each client and each day, whether they will have a car accident. However, a car accident rarely happens to any given person, say once every 10 years. A dummy (stupid) model always predicting that clients will have no accident would be right 365 x 10 - 1 (3649) times over a 10-year period! It seems excellent, with 99.97% accuracy. But the model missed the single time when the accident happened. You don’t need AI to make the same prediction for every client every day. That is why you need a different type of performance measure, one that seriously takes into account the number of times your model correctly predicted an accident when one happened (for example the recall and the F1-score).
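The insurer example can be checked with a few lines of Python. This sketch uses the standard definitions of accuracy, precision, recall and F1-score; the day of the accident is an arbitrary assumption, and the guards against division by zero are a convention chosen here (a score of 0 when nothing can be computed).

```python
# One client over 10 years: 3650 daily labels, with a single accident (1).
days = 365 * 10
actual = [0] * days
actual[1234] = 1  # hypothetical day of the accident

# Dummy model: always predicts "no accident".
predicted = [0] * days

true_positives = sum(a == 1 and p == 1 for a, p in zip(actual, predicted))
false_positives = sum(a == 0 and p == 1 for a, p in zip(actual, predicted))
false_negatives = sum(a == 1 and p == 0 for a, p in zip(actual, predicted))

accuracy = sum(a == p for a, p in zip(actual, predicted)) / days
# Recall: share of real accidents that the model caught.
recall = true_positives / (true_positives + false_negatives)
# Precision: share of predicted accidents that were real (0 if none predicted).
predicted_positives = true_positives + false_positives
precision = true_positives / predicted_positives if predicted_positives else 0.0
# F1-score: harmonic mean of precision and recall (0 if both are 0).
f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0

print(f"accuracy={accuracy:.4f} recall={recall} f1={f1}")
```

The accuracy rounds to 0.9997, yet the recall and the F1-score are both 0: these two measures expose that the dummy model never detects the one event we care about.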

PROJECT

Let's finish what we started. Now that you know a lot more about Machine Learning models, let's train one on our dataset in Lobe!

Lobe doesn't work on recent iOS devices, but you can use Teachable Machine instead!

- The last step of this exercise is the training part. Watch the video below and train your own model on Lobe.ai!

- Go to the Use tab in Lobe to make your first predictions 🚀

Sum up

- A Machine Learning model must be trained on a dataset related to its end use.

- There are two phases in a project that uses a Machine Learning model, the training phase and the inference phase.

- During this course, we will use Supervised Learning with labelled datasets.

- Lobe.ai uses models that make classification. The results given by the model are categories. On Akkio it is regression. The results given by the model are numerical values.

- In order to choose the right model, it is necessary to have established the precise needs for your project.

- To be functional, a model must be well trained. You have to find the right fit, a good balance between bias and variance.

Before starting the Project module, we must stop to consider ethics in the next chapter. It is really important to keep in mind the side effects AI can have on our lives.