Datasets:

Introduction

Processing with AI

Before anything else

In a way, data, and more specifically datasets, are the food of machine learning algorithms. We briefly mentioned data in the previous chapter, but what are they? Where do they come from? How do we gather them? We will answer all of these questions and more in this chapter. Data, and above all datasets, are the foundation of AI algorithms, and building them is considered the first step toward an efficient model.

From data

Data are individual facts, statistics, or items of information, often numeric, that are collected through observation.

A piece of data is information that can come from many different sources: a click on a website, a like on an Instagram picture, a temperature variation measured by a weather station, or a picture of a dog. As you can see, we are surrounded by data and we spend our time producing new data!

Data can come directly from our use of digital technologies, but they can also come from the physical world, where they are usually collected by sensors, as with temperature variations. To be usable by algorithms, data must be digitized. Of course, a lot of data has never been digitized, or has been digitized only for specific purposes. There was a time when everything was written down by hand and archived in boxes.

In Computer Science, data as a general concept refers to existing information or knowledge that is represented or coded in a form suitable for better usage or processing. Data processing generally consists of collecting and manipulating items of data in order to produce meaningful information.

Let's go back a little in history. The use of data has evolved a lot since the Internet arrived in our homes. Around 2002, a revolution named Big Data took place. The amount of data grew sharply with the emergence of social networks and, more generally, with the democratization of the Internet. This suddenly generated far more data than before, requiring more complex infrastructures and more powerful tools to organize and sort it all.

At the same time, we started to think about the value and possible monetization of all these data, and about their impact on some markets. Suddenly it became possible to track the behaviour of users on web pages and adjust the interfaces accordingly. As a result, companies try to collect as much data as possible to improve their services and earn money. However, regulations have been put in place, and now, each time we open a new website, we are asked to consent to the owner collecting our data through cookies 🍪.

In Europe, the General Data Protection Regulation (GDPR) protects European citizens from websites that collect their data to train models and predict all sorts of things, for example which product they might buy (targeted advertising). We need to keep in mind that nothing is free. When you are not paying for a product, you are the product. Or, more precisely, the data you generate are.

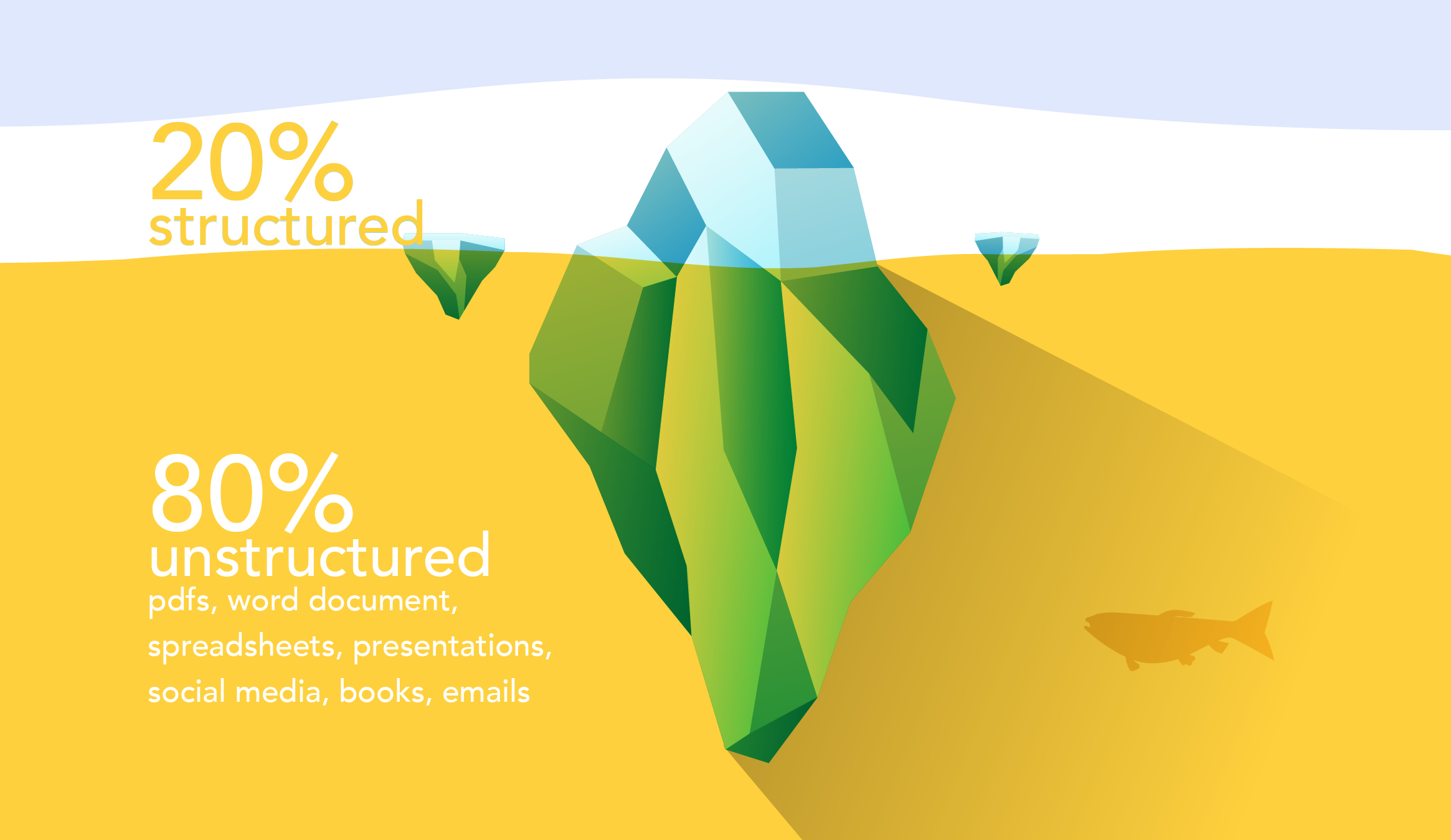

Structured vs unstructured data

What should we consider as data?

Consider an e-commerce website selling shoes. The website has a product catalog. Each product has a name, a description, a size, a weight and a price. One can also expect some more information, like color or material. Customers are described by a name, an address, a phone number and an email. Customers also place orders. Each order is made of a list of items with their quantities and a status (paid, delivered...). All this information can be stored in a spreadsheet or in a relational database, with formats like numbers, dates and strings. We call this kind of data "structured data". Structured data is easy to use. With a simple query, you can, for example, get the phone number of a customer.

This store also receives dozens of emails from customers every day. Sometimes customers ask for information, sometimes they send a complaint. Before reading an email, you have no idea what kind of content it contains. When you receive an email, the only information you have is the name of the sender, the subject and the content as raw text. This is what we call "unstructured data". Other examples of unstructured data are images, audio, video or PDF files. Unstructured data is often said to make up about 80% of enterprise data. Using machine learning on unstructured data like emails is a good way, for example, to identify the content of an email, which could allow customer care teams to react more efficiently.

As a consequence, it is important for a company to list all the data that could be available and to make sure data practitioners can access it, for example by storing it in data lakes.
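To make the "simple query" concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table name, column names and customer records are made up for illustration; a real shop database would have its own schema.

```python
import sqlite3

# In-memory database; the schema and the records below are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, email TEXT, phone TEXT)"
)
conn.executemany(
    "INSERT INTO customers (name, email, phone) VALUES (?, ?, ?)",
    [
        ("Alice Martin", "alice@example.com", "+33 1 23 45 67 89"),
        ("Bob Durand", "bob@example.com", "+33 6 12 34 56 78"),
    ],
)


def phone_of(connection, customer_name):
    """Return the phone number stored for a customer, or None if unknown."""
    row = connection.execute(
        "SELECT phone FROM customers WHERE name = ?", (customer_name,)
    ).fetchone()
    return row[0] if row else None


print(phone_of(conn, "Alice Martin"))
```

Because every customer has the same named fields, one short query is enough. Nothing comparable exists for the raw text of an email, which is why unstructured data needs more work.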

To datasets

A dataset is a collection of data.

In the case of tabular data, a dataset corresponds to one or more database tables, where every column of a table represents a particular variable, and each row corresponds to a given record of the dataset in question.

It can also be an image dataset or a sound dataset: any type of data grouped together in order to train a model for a specific application.

To train Machine Learning algorithms, we need to gather data in a relevant and useful way. This is what we call a dataset. In order to build a good dataset, you will need to label all of your data according to their characteristics, thus creating different groups of data: the classes. A label is a piece of descriptive information you attach to a piece of data. It will allow you to search your dataset using keywords. It will also allow you to train your model properly by letting it know what it is "looking at" during training.
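As a small illustration of rows and columns, the sketch below reads a tiny product table with Python's `csv` module. The products, column names and values are invented for the example.

```python
import csv
import io

# A tiny made-up product table in CSV form: the first line names the columns.
raw = """name,size,weight_g,price_eur
Runner,42,310,89.90
Trail,44,365,119.00
City,40,280,74.50
"""

rows = list(csv.DictReader(io.StringIO(raw)))

# Each row is one record; each key is one variable (column).
columns = list(rows[0].keys())
prices = [float(row["price_eur"]) for row in rows]

print("columns:", columns)
print("number of records:", len(rows))
print("mean price:", round(sum(prices) / len(prices), 2))
```

Here each line of the file is one record (a product) and each column is one variable (name, size, weight, price).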

Example:

A classification model to detect dog breeds 🐶

We have to tag all our data, which are images of every dog breed: Labrador Retriever, Spaniel, Boxer... Image databases with well-labeled data already exist, such as ImageNet.

A database usually means "an organized collection of data". A database is usually under the control of a database management system, which is software that, among other things, manages multi-user access to the database and drastically improves performance on very large datasets compared to spreadsheet software.
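One common way to label images is to put them in one folder per class and use the folder name as the label. The sketch below assumes that convention; the file paths are hypothetical and no image is actually read.

```python
from collections import Counter
from pathlib import PurePosixPath

# Hypothetical file paths: the parent folder name is used as the label.
image_paths = [
    "dogs/labrador_retriever/001.jpg",
    "dogs/labrador_retriever/002.jpg",
    "dogs/spaniel/001.jpg",
    "dogs/boxer/001.jpg",
    "dogs/boxer/002.jpg",
    "dogs/boxer/003.jpg",
]


def label_from_path(path):
    """Use the name of the folder containing the image as its label."""
    return PurePosixPath(path).parent.name


labeled = [(path, label_from_path(path)) for path in image_paths]

# Count how many images each class contains.
class_counts = Counter(label for _, label in labeled)

# Labels make the dataset searchable by keyword.
boxers = [path for path, label in labeled if label == "boxer"]

print(class_counts)
print(boxers)
```

Counting the images per class is also a quick way to spot classes that are under-represented.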

A dataset usually refers to data selected and arranged in rows and columns for processing by statistical software. The data might have come from a database, but it might not.

In a model training process, we usually use three different types of dataset. Let's use our example use case of dog breed classification to find out:

- The Training dataset: this is the one you train your model on and use to make adjustments to it. It must be a set of labeled images. In general, the larger the dataset, the better the training will go. Note that the size of the dataset directly impacts the speed of the training process.

- The Validation dataset: this one is used to verify that your model is well trained. It is also made of labeled data and is used to check the quality of the training process. The Validation dataset must be as representative as the Training dataset, with the same diversity of data.

- The Production dataset: it is composed of new data encountered by our already trained model, which we want it to analyze. It is made of non-labeled data that we feed to the model while it is in use.
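The training and validation datasets are often obtained by splitting a single labeled dataset. Here is a minimal sketch of such a split; the function name `split_dataset`, the 80/20 ratio and the sample file names are assumptions for illustration, not something Lobe requires you to write yourself.

```python
import random


def split_dataset(samples, validation_fraction=0.2, seed=0):
    """Shuffle labeled samples and split them into training and validation lists."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(samples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]


# Hypothetical labeled samples: (file name, label).
samples = [(f"img_{i:03d}.jpg", "boxer" if i % 2 else "spaniel") for i in range(10)]
train, validation = split_dataset(samples, validation_fraction=0.2, seed=42)

print(len(train), "training samples,", len(validation), "validation samples")
```

A plain random split like this one does not guarantee that every class appears in both parts. With small or unbalanced datasets, splitting each class separately (a stratified split) is a possible refinement.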

The Good, the Bad and the Ugly

As you might expect, there are good and bad data. So when we create our dataset, we need to pay close attention to four things: accuracy, completeness, reliability and relevance. Let's look at a practical example to understand what this means:

Example:

Let's take again our classification model to detect dog breeds 🐶

- Accuracy: data must be correct in every detail. Is the information correct? Is our dataset composed of dog pictures only?

- Completeness: data must account for all necessary use cases. Is the information comprehensive? Does our dataset cover all dog breeds?

- Reliability: data should not contradict trusted sources. Does the information conflict with trusted sources? For this example, do our data come from recent veterinarian pictures? Have the pictures been captured by a camera, or have they been drawn by a child?

- Relevance: data should fit the use case. Are you gathering data with your use case in mind? Is our dataset only composed of dog faces and not other parts of their body? Have the dogs been captured in multiple poses and lighting conditions?
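Some of these checks can be partly automated. The sketch below audits a handful of hypothetical records for accuracy, completeness and reliability; the field names (`label`, `source`) and the list of expected breeds are assumptions made for the example. Relevance usually still requires a human to look at the pictures.

```python
from collections import Counter

# Hypothetical expected classes and labeled records for the dog breed example.
EXPECTED_BREEDS = {"labrador_retriever", "spaniel", "boxer"}

records = [
    {"file": "001.jpg", "label": "boxer", "source": "camera"},
    {"file": "002.jpg", "label": "spaniel", "source": "camera"},
    {"file": "003.jpg", "label": "cat", "source": "camera"},     # not a dog
    {"file": "004.jpg", "label": "boxer", "source": "drawing"},  # not a photo
]


def audit(records, expected_labels):
    """Return simple findings for accuracy, completeness and reliability."""
    counts = Counter(record["label"] for record in records)
    return {
        # Accuracy: labels that should not be in the dataset at all.
        "unexpected_labels": sorted(set(counts) - expected_labels),
        # Completeness: expected classes that have no example yet.
        "missing_labels": sorted(expected_labels - set(counts)),
        # Reliability: records that do not come from a camera.
        "unreliable_files": [r["file"] for r in records if r["source"] != "camera"],
    }


report = audit(records, EXPECTED_BREEDS)
for finding, values in report.items():
    print(finding, values)
```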

It is really important to keep in mind that a bad dataset will lead to wrong predictions! Even worse, bad data leads to Machine Learning algorithms with unwanted biases. In our example, we won't hurt the dogs too much with a biased model, but think of facial recognition. If a dataset of human faces reflects societal biases, a model trained on those data is likely to be racist! We will go into more detail about the consequences this can have in the Ethics of AI chapter later.

In 2021, a paradigm shift occurred: data scientists moved from striving to build better models (model-centric approach) to cleaning datasets better (data-centric approach). Andrew Ng stated in a not-so-recent interview that building a good model is now a solved problem for most business applications (see the scikit-learn library).

Where to find the good ones?

There are some online dataset libraries, Kaggle being the most famous. You can find all kinds of datasets for free there, mostly in English. You will have to search for a dataset on Kaggle for your project, but let's leave that aside for now. France has a well-developed Open Data culture, mostly around public data that you can find on data.gouv.fr.

For image databases, we have already mentioned the famous ImageNet resource. Here is a list of other dataset libraries:

- CelebA, more than 200,000 pictures of celebrities' faces.

- WordNet, a lexical database composed of more than 117,000 expressions.

- Etalab, a French website that gathers direct links to a lot of public datasets (transport data, geographical data, etc.).

When you have found the dataset you need, you will probably have to clean it to make it usable for your use case or application. Indeed, you probably won't need all the classes or columns of your dataset. First, you may find some erroneous data or some duplicates. Then you might have to standardize the data format (only numbers, for example), check the sources, anonymize the data, etc. Finally, you should get rid of the potential biases that could come from poor sampling. This cleaning step is really important. You will try it below with an image dataset and later in the course with Excel!

We now know that we can find ready-to-use datasets online, but another interesting way to gather data is web scraping. For example, we could search #dogs on Instagram and download all the images under this hashtag to make a first draft of our dataset. However, if you go to this hashtag on Instagram, you will see why this would require quite an extensive amount of cleaning. How many actual pictures showing only a dog can you find? Is the owner in the picture? Is it a real picture or a drawing? There are several ways of gathering data, each with its own advantages and drawbacks, so make sure to keep that in mind for your next search!

For the Lobe project we are going to do, you can choose how to collect your data. You can either take pictures with your webcam directly in Lobe, take pictures and import them, get pictures from the Internet (web scraping) or mix all of these!
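Here is a minimal sketch of three of these cleaning steps: dropping erroneous entries, removing exact duplicates and standardizing the label format. The records are made up, and the image content is represented by a few placeholder bytes instead of real files.

```python
import hashlib

# Hypothetical raw records: (file name, file content as bytes, label).
raw_records = [
    ("a.jpg", b"\x01\x02\x03", " Boxer "),
    ("b.jpg", b"\x01\x02\x03", "boxer"),  # same bytes as a.jpg: duplicate
    ("c.jpg", b"\x04\x05", "Labrador Retriever"),
    ("d.jpg", b"", "spaniel"),            # empty file: erroneous
    ("e.jpg", b"\x06", ""),               # missing label: erroneous
]


def normalize_label(label):
    """Standardize the label format: trimmed, lowercase, underscores for spaces."""
    return "_".join(label.strip().lower().split())


def clean(records):
    """Drop erroneous entries and exact duplicates, and normalize labels."""
    seen_hashes = set()
    cleaned = []
    for name, content, label in records:
        label = normalize_label(label)
        if not content or not label:
            continue  # erroneous entry: empty file or missing label
        digest = hashlib.sha256(content).hexdigest()
        if digest in seen_hashes:
            continue  # exact duplicate of a file we already kept
        seen_hashes.add(digest)
        cleaned.append((name, label))
    return cleaned


cleaned = clean(raw_records)
print(cleaned)
```

Hashing only catches byte-for-byte duplicates. Two different photos of the same scene, or a resized copy, would need other techniques. Checking sources, anonymizing data and correcting sampling biases are not covered by this sketch.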

I Lobe you

You must follow the instructions given below in order to create your first model, an image classifier.

On certain computers, Lobe.ai does not work properly. Use Teachable Machine instead! It can do the same things, it's free and it runs in the browser.

Let's practice and discover Lobe.ai. Lobe is a free, private desktop application that has everything you need to take your machine learning ideas from prototype to production. It allows you to:

- label your data,

- train a classification model,

- evaluate the results of the training,

- use your trained model,

- and finally export it to a web app.

Lobe uses two models, ResNet and MobileNet, which are both Deep Learning algorithms. Remember this figure:

We will go deeper into the different types of Machine Learning algorithms in the next chapter. Know that these are (very) complex models whose precise functioning is rarely understandable by a human.

PROJECT

- Start by downloading the app on Lobe.ai. An email address is required to download it.

Lobe doesn't work on recent iOS devices, but you can use Teachable Machine instead!

- We made you a video tour of Lobe's interface. Watch it to discover how it works and what you will need for this project:

- You now have to create or find a dataset! There are multiple ways to do it. In the video below, we explain our use case and how we created our dataset to build our application. We give you advice on making an appropriate dataset. For this first exercise, we strongly suggest that you take your own photos, with your smartphone for example.

In the video below, we talk about Computer Vision, a subfield of computer science that focuses on giving computers a higher-level understanding of images (photos or videos). Examples of computer vision research fields are image segmentation, optical character recognition (OCR), face recognition, etc.

During Part 2: Explorations, you will learn more about Computer Vision by discovering a model named PoseNet.

Sum up

- Without data, no Machine Learning.

- Data are labeled and gathered into a dataset.

- From the dataset stage onward, we must be careful not to incorporate biases.

- A dataset must be complete, reliable, relevant and accurate to avoid biases and unsuitable models.

- During the training process of a Machine Learning model, three datasets are used: the training one, the validation one and the production one.

Good, you should now have a dataset ready to use! Before you start training a model on Lobe, let's read the next chapter to understand what a model is and which one to use in which case!