Transfer Learning with KNN

Processing with AI

In this module, we are going to discover Transfer Learning, a technique whose goal is to reuse the "expertise" of a model for another application. We are going to use MobileNet with an algorithm called KNN to create a custom image classifier.

Who are my neighbors?

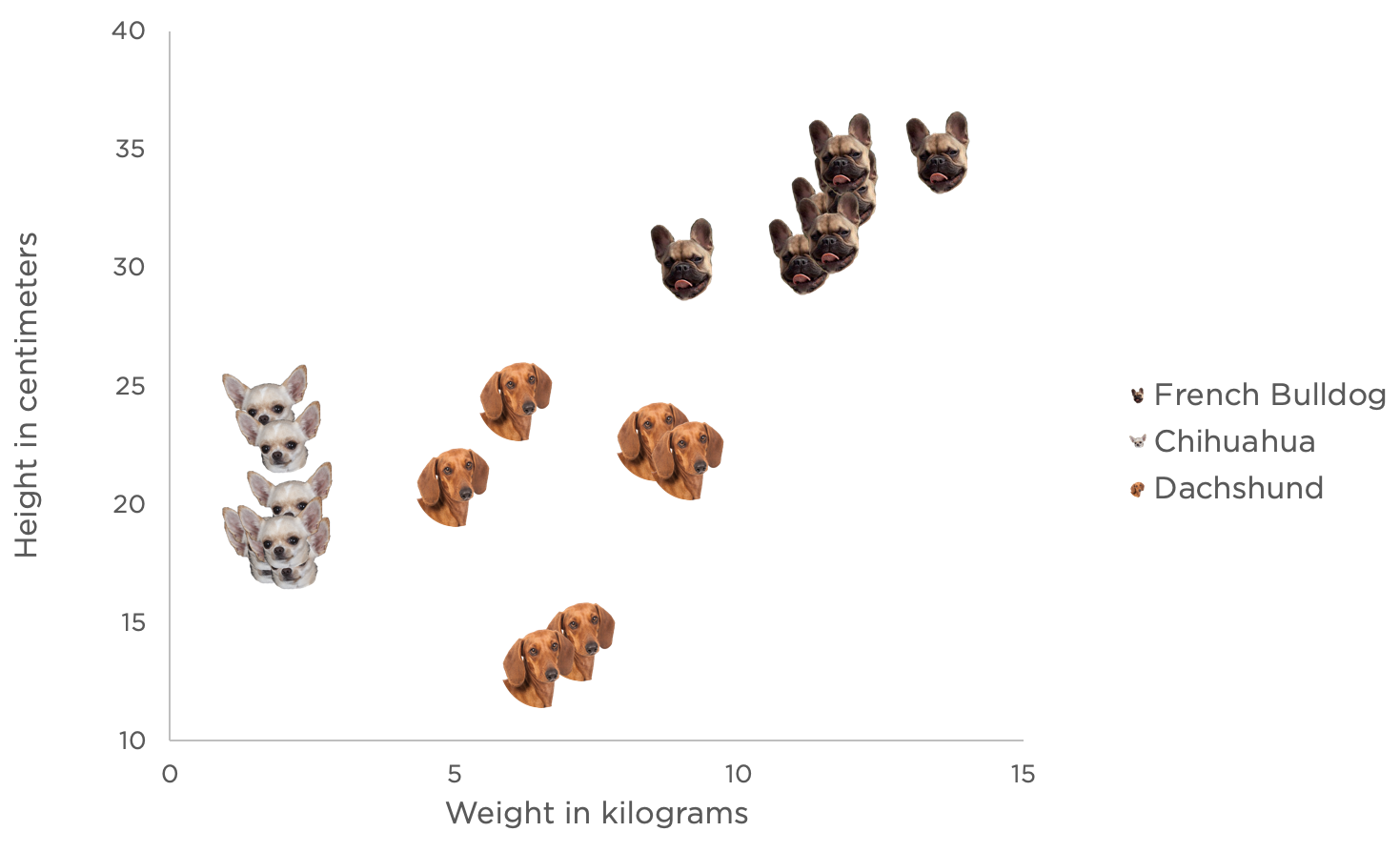

KNN can be used for both classification and regression problems. We will use it in this module to build a custom classification model. Let's explain how it works through a theoretical case: say we want to build a model able to guess which breed a dog belongs to, given its height and weight.

Let's start by gathering and labeling data about a selection of small dogs.

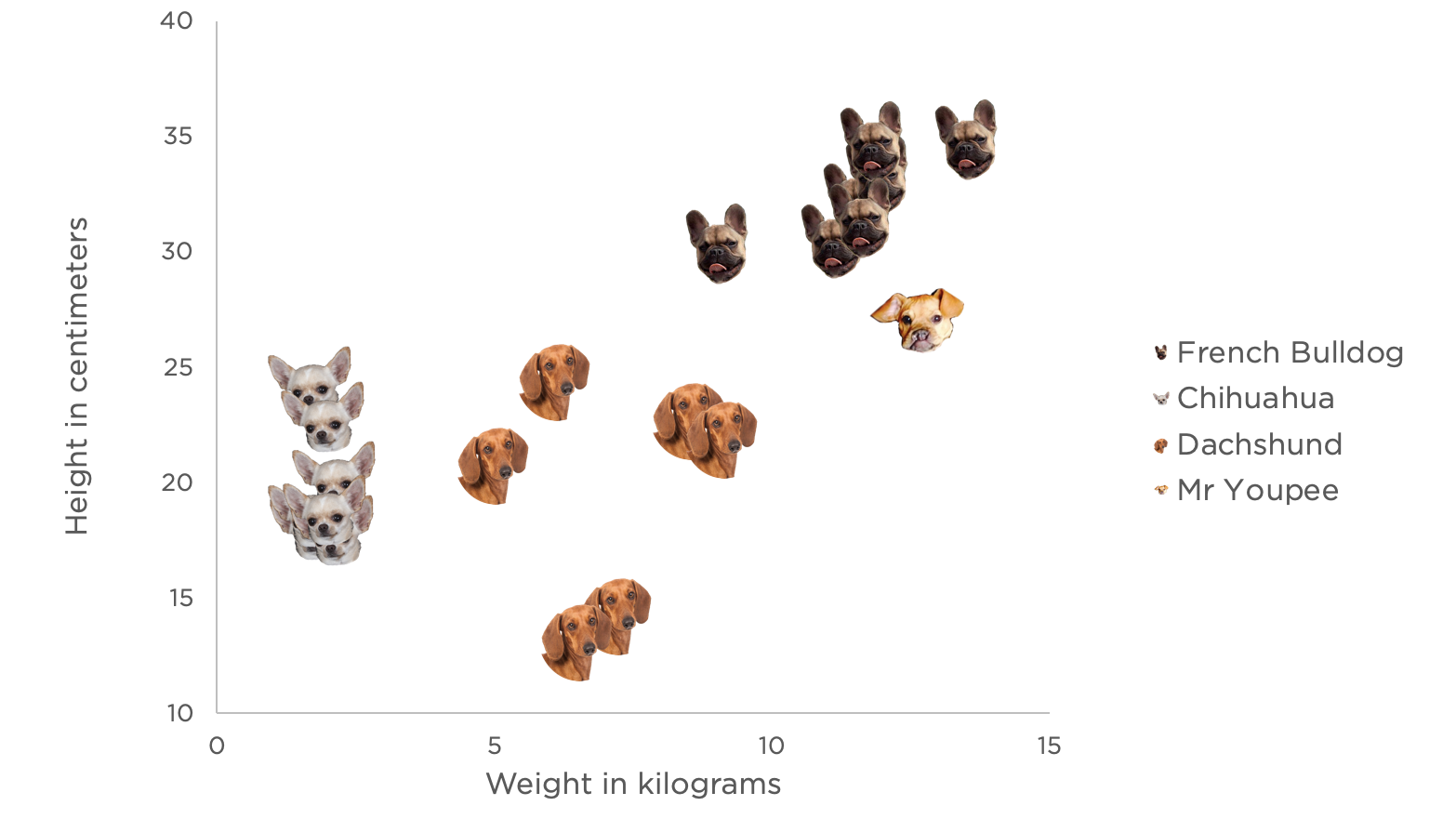

Now, let's say that I want to classify a dog of an unknown breed.

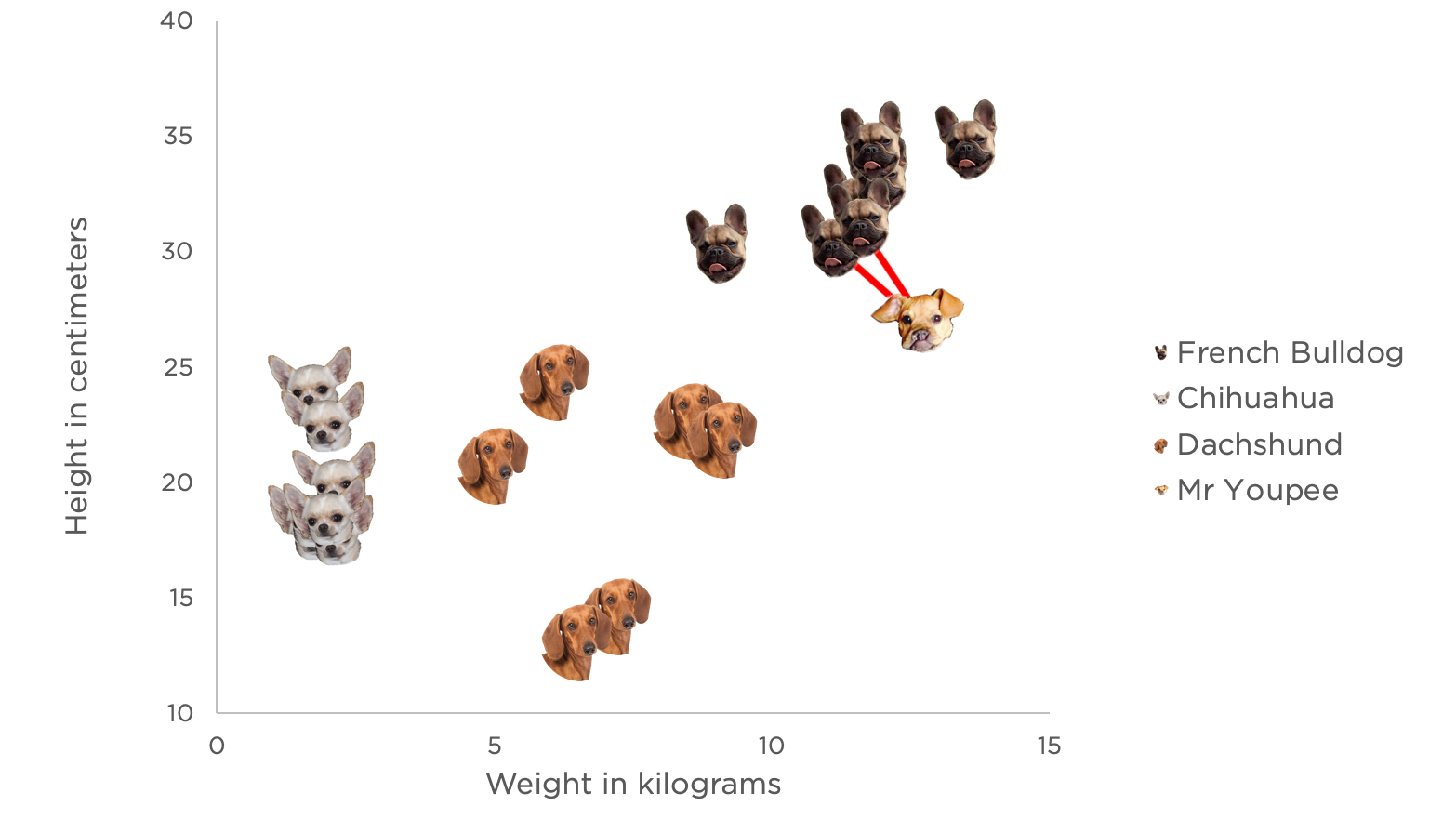

A KNN algorithm looks at the Euclidean distance between our new example and each of our previous data points to determine which ones are its closest neighbors. Then, by looking at the categories they belong to, it can predict which breed our new dog is. When doing that, the KNN only takes into account the k closest ones. k is a number chosen beforehand, hence the name k-Nearest Neighbors.

In our case, if we choose k = 2, it seems that Mr. Youpee is a French Bulldog. If we had picked a higher k value, the classifier would also have found a little bit of Dachshund.
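To make the idea concrete, here is a minimal sketch of a KNN classifier in plain JavaScript. The measurements, the `unknownDog` point, and the function names are all invented for illustration; they are not the values plotted in the figures.

```javascript
// Hypothetical measurements (height in cm, weight in kg), invented for
// illustration only. They are not the values shown in the figures.
const dogs = [
  { height: 30, weight: 11, breed: "French Bulldog" },
  { height: 32, weight: 12, breed: "French Bulldog" },
  { height: 28, weight: 10, breed: "French Bulldog" },
  { height: 22, weight: 8, breed: "Dachshund" },
  { height: 20, weight: 7, breed: "Dachshund" },
  { height: 21, weight: 9, breed: "Dachshund" },
];

// Euclidean distance between two points given as arrays of numbers.
// It works for any number of dimensions, not just two.
function euclideanDistance(a, b) {
  return Math.sqrt(a.reduce((sum, value, i) => sum + (value - b[i]) ** 2, 0));
}

// Count how many of the k nearest examples belong to each breed.
function knnVotes(examples, point, k) {
  const nearest = examples
    .map((ex) => ({
      label: ex.breed,
      distance: euclideanDistance([ex.height, ex.weight], point),
    }))
    .sort((x, y) => x.distance - y.distance)
    .slice(0, k);

  const votes = {};
  for (const { label } of nearest) {
    votes[label] = (votes[label] || 0) + 1;
  }
  return votes;
}

// Predict the breed that collects the most votes among the k neighbors.
function knnPredict(examples, point, k) {
  const votes = knnVotes(examples, point, k);
  return Object.keys(votes).reduce((best, label) =>
    votes[label] > votes[best] ? label : best
  );
}

const unknownDog = [29, 11]; // hypothetical height and weight
console.log(knnPredict(dogs, unknownDog, 2)); // French Bulldog
console.log(knnVotes(dogs, unknownDog, 4)); // { 'French Bulldog': 3, Dachshund: 1 }
```

With this made-up data, k = 2 only finds French Bulldogs, while k = 4 also picks up one Dachshund neighbor, which mirrors the effect of a higher k described above.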

In this example, we used a 2D graphical representation to give you a better understanding, but in practice a KNN often looks for neighbors in a space with 100 dimensions or more, something that our mind cannot even picture!

If you want to see a KNN algorithm in action, you can try this interactive demo.

KNN is a really powerful (yet simple) tool that you can use to do machine learning without even using a neural network! Now, how can we use this new tool with MobileNet?

ImageNet is an image database of nearly 15 million images organized by category. MobileNet classifies images with labels from the ImageNet database. MobileNets are small, low-latency, low-power models parameterized to meet the resource constraints of a variety of use cases.

Transfer Learning

Our previous example with dogs was only using two input features, height and weight. When classifying images, we soon find ourselves having to deal with millions of inputs.

An RGB image of 1000x1000 pixels has 3,000,000 dimensions (1000 * 1000 * 3 color channels).

If we wanted to use a KNN for image classification, it would be almost impossible to gather enough data to represent every possible image. That's when Transfer Learning is going to save us!

As you may already know, MobileNet models are based on Convolutional Neural Networks. A CNN can quickly spot features on a complex input (sound, image, or video for example).

See how with each layer from bottom to top, it can reduce the number of values in the input.

What if we could use this ability to detect key features in an image to build a custom classifier? That's what we are going to do: thanks to MobileNet, we are going to reduce any input image to 1000 inputs (which is a lot less than 3 million!).
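As a quick sanity check of the arithmetic, the short sketch below compares the number of raw pixel values with the 1000 values mentioned above. The function name `rawInputCount` is just an illustrative helper.

```javascript
// Number of raw input values in an image: width * height * color channels.
function rawInputCount(width, height, channels = 3) {
  return width * height * channels;
}

const rawValues = rawInputCount(1000, 1000); // RGB image of 1000x1000 pixels
const featureValues = 1000; // size of the MobileNet output described above

console.log(rawValues); // 3000000
console.log(rawValues / featureValues); // 3000
```

In other words, the KNN has to compare 3000 times fewer values per image when it works on MobileNet's output rather than on raw pixels.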

Have a look at this interactive documentation to learn more about how we are going to reuse MobileNet using the .infer() function.

Back to the coding board

The good news is that we won't have to recode a KNN from scratch, as TensorFlow.js already includes a KNN Classifier.

You can find a working p5.js implementation of a KNN using MobileNet here.

If you want to learn more about KNN and Transfer Learning, have a look at this 3-part video series by The Coding Train on how to build an image classifier using ml5.js, a library built on top of TensorFlow.js.

Here is how we did it:

- Import TensorFlow's KNN classifier by adding a `<script>` tag in your HTML file. See TensorFlow's GitHub to find it.

- The function `knnClassifier.create();` initializes and returns an instance of this classifier. Use it in your `setup()` function.

- You can add a new example to your classifier by doing `myClassifier.addExample(newData, className);`, newData being the result of MobileNet's `.infer()` function and className being a number or a string.

- Finally, you can start a prediction by using `myClassifier.predictClass(newData).then(callback)`.
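The steps above can be sketched as two small helpers. This is only one possible arrangement, not the reference implementation: the names `addTrainingExample` and `classifyImage` are hypothetical, `classifier` is assumed to be the object returned by `knnClassifier.create()`, and `featureExtractor` is assumed to be a loaded MobileNet model exposing `.infer()`.

```javascript
// Hypothetical helpers. `classifier` is assumed to come from
// knnClassifier.create(), and `featureExtractor` is assumed to be a loaded
// MobileNet model exposing .infer(). Both are passed as arguments so the
// helpers do not depend on global variables.

// Turn an image into MobileNet features and store them under a class name.
function addTrainingExample(classifier, featureExtractor, image, className) {
  const features = featureExtractor.infer(image);
  classifier.addExample(features, className);
}

// Predict the class of an image.
// Resolves to null when the classifier has not been given any example yet.
async function classifyImage(classifier, featureExtractor, image) {
  if (classifier.getNumClasses() === 0) {
    return null;
  }
  const features = featureExtractor.infer(image);
  const result = await classifier.predictClass(features);
  return result.label;
}
```

In a p5.js sketch, you might call `addTrainingExample` when a key is pressed and `classifyImage` on each new frame. Depending on the library you load MobileNet from, the image may need to be the underlying HTML element (for a p5.js capture, `video.elt`).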

Now, let's have some fun!

Visit the JavaScript and p5.js pages to help you if you haven't done so already.

Train your KNN to detect two or more things, for example, positions of your hand, facial expressions (you smiling or not for example), you and your friends, different objects... Play with your new model and try to see:

- What is the minimum number of examples needed to get a good result?

- Does the prediction still make sense if you change the camera angle between training and predictions?

- What small details in your picture make the network "click" and switch from one category to another? Can you find a way to reliably trick it?

- Try to train your model to detect the difference between you smiling and you not smiling (or anything with your face). Does it work with someone else's face without re-training it?
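While experimenting, it can help to display how confident the classifier is for each class rather than only the winning label. The sketch below assumes the object resolved by `predictClass()` carries a `confidences` map from class name to a score between 0 and 1; `describeConfidences` is a hypothetical helper name.

```javascript
// `result` is assumed to have the shape resolved by predictClass(), e.g.
// { label: "smile", confidences: { smile: 0.75, neutral: 0.25 } }
function describeConfidences(result) {
  return Object.entries(result.confidences)
    .sort(([, a], [, b]) => b - a) // most confident class first
    .map(([label, score]) => `${label}: ${Math.round(score * 100)}%`)
    .join(", ");
}

const example = { label: "smile", confidences: { neutral: 0.25, smile: 0.75 } };
console.log(describeConfidences(example)); // smile: 75%, neutral: 25%
```

Watching these percentages move as you change the camera angle or your expression makes it easier to spot the moment the model switches from one category to another.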

By doing these little exercises, you should have experienced firsthand an overfitted and/or biased model, whose consequences were explained in the Ethics of AI module.

Quiz

Answer the quiz to make sure you understand the main notions. Some questions may need to be looked up elsewhere through a quick Internet search!

You can answer this quiz as many times as you want, only your best score will be taken into account. Simply reload the page to get a new quiz.

PRACTICE

- Create a p5.js sketch (from scratch or using this code as a base) that implements a custom image classifier using KNN and MobileNet.

- Train your model to detect at least three different classes.